MTH2002 Coding Theory - Cheat Sheet

Made by William Fayers :)

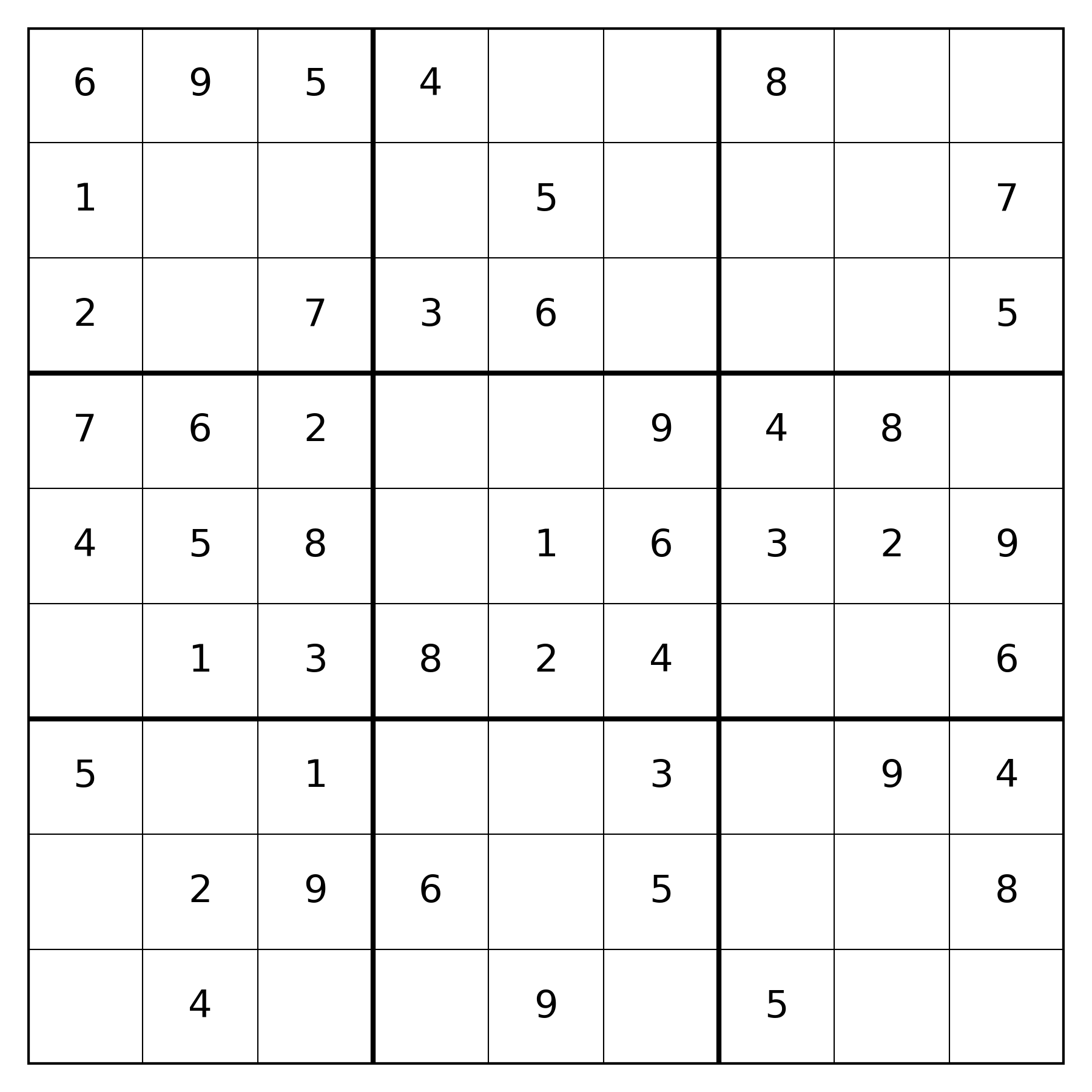

Make sure to read this before the exam - I recommend completing a practice test with it so you learn where everything is and can ask if you don’t understand something. I might’ve made mistakes! There’s a sudoku at the end in case you finish early, and the cheat sheet is generated based on analysis of past exams and given material.

Small warning: the following makes the most sense if you read it all first. It also assumes you have knowledge from the linear algebra module (namely finding a basis and simple row/column operations) - make sure you know these!

Possible Question Topics and their Explanations

1. Parameters of a Code

- Definition: A code is a subset , where is a -ary alphabet and each element of is a codeword.

- Parameters of a Code:

- A standard code is an -code, where:

- : Number of symbols in the alphabet (can be excluded if not important).

- : Length of each codeword.

- : Total number of codewords (). Can also be calculated with if linear.

- : Minimum Hamming distance ().

- A linear code is an -code, where:

- : Dimension of the code, equal to .

- A standard code is an -code, where:

2. Code Representation and Properties

- Code Matrix:

- A code can be represented as a matrix of codewords (a code matrix).

- Each row corresponds to a codeword.

- Code Equivalence:

- Two codes are equivalent if their matrices can be transformed into one another through row operations.

- Code Symmetry:

- A code is symmetric if its structure remains unchanged under matrix operations.

- This property simplifies analysis and decoding.

- Puncturing:

- Definition: Reducing code length by deleting symbols.

- Represented as .

- Example: If , then after puncturing the last symbol.

- Code Rate:

- Definition: The efficiency of the code/how quickly a code can transmit information.

- Calculated as the ratio of the number of information symbols (dimension) to the length of codewords : . For a dual code, we can write , since by definition.

- Probability of Errors (for binary codes, i.e. a symbol is correct or isn’t):

- Symbol Error Probability: the probability that a single symbol is received incorrectly, represented by .

- No Errors Probability: the probability that a codeword is received without any errors, .

- Cumulative Error Probability: the cumulative probability of having at most errors, .

- Expected Errors: expected number of errors in a codeword of length , assuming an average.

- Undetected Error Probability (linear code): the probability that an error occurs but isn’t detected, , where is the number of non-zero valid codewords of Hamming weight (the number of non-zero symbols) in .

- Decoding Success Probability: the probability that the decoder successfully identifies the transmitted codeword, , where is the number of coset leaders of Hamming weight (the number of non-zero symbols) in a standard array (easily found with a table for the standard array - just count!).

- Decoding Failure Probability: the probability that the decoder fails to correctly identify the transmitted codeword, .

Helpful information: Here are some common expansions of , calculated using the binomial expansion…

3. Hamming Distance

- Definition:

- The Hamming distance is the number of positions where symbols differ between two codewords.

- Example: For codewords and , the Hamming distance is .

- Minimum Hamming Distance:

- The minimum Hamming distance is the least distance between any two distinct codewords in the code.

- Used to determine error detection and correction capabilities.

- Distance Theorem:

- If is a code with minimum Hamming distance , then:

- If and , then detects errors.

- If and , then corrects errors.

- If is a code with minimum Hamming distance , then:

- Metric Space:

- The Hamming distance can form a metric space, satisfying:

- Non-negativity.

- Symmetry.

- Triangle inequality: .

- The Hamming distance can form a metric space, satisfying:

- Solid Hamming Distance Sphere:

- A solid sphere (in the aforementioned metric space) of radius around a codeword is defined as .

- Volume of the solid sphere is given by: .

- Sphere-Packing Bound Theorem:

- Relates the maximum number of codewords that can exist in a code without overlap: .

- Useful for proofs regarding code efficiency and error correction.

4. Perfect Codes

- Definition:

- An -code is perfect if it satisfies the following condition:

- If is even then the code can never be perfect, as will round down (away from perfect).

- Properties:

- Perfect codes achieve maximum efficiency in error detection and correction.

- They maximize the number of codewords that exist without overlap, ensuring that every possible received vector is either a codeword or within the error-correcting capability of the code. Hence, perfect codes have all unique coset leaders.

- Examples:

- The earliest examples of perfect codes are the original Hamming codes…

- Hamming codes are denoted as for some integer . They can detect up to errors and correct , since .

- They have notation .

- Another example is the Golay code, e.g. .

- The earliest examples of perfect codes are the original Hamming codes…

5. Linear Codes

- Definition:

- A linear code is a code where any linear combination of codewords is also a codeword, meaning it is closed under addition.

- Mathematically, this means linear codes must be a subspace, meaning it satisfies these three conditions (by the Quick Subspace Theorem):

- Contains the zero vector,

- Closed under vector addition,

- Closed under scalar multiplication.

- Alternatively, a linear code must form a solution set to a homogenous system of linear equations (since that is also a vector subspace), so if you can state this set then it must by a linear code.

- Generator Matrix:

- A linear code can be represented by a generator matrix .

- The rows of form a basis for the linear code.

- Codewords are generated by multiplying with information vectors: , where is a information vector.

- Parameters:

- Linear codes have parameters :

- : Length of codewords, found as the number of columns in .

- : Dimension of the code, found with (or the following Quick Subspace Theorem).

- : Minimum distance of the code, equal to the minimum number of vectors (from the columns of the parity-check matrix) linearly combined to give the zero vector (Linear Distance Theorem), or just manually calculated from the full code.

- Note: by the Singleton Bound, .

- The dimension can be found using the Quick Subspace Theorem:

- If is in row-echelon form, the number of non-zero rows gives (the dimension).

- This corresponds to the rank of the matrix.

- Cosets:

- Cosets are formed by adding a fixed codeword to all existing codewords.

- There exists cosets for a given code, where some may be not be unique (unless a perfect code).

- Each coset has a coset leader, which is the codeword with the smallest Hamming weight (number of non-zero symbols) - it also represents the most likely received word in the coset. Keep in mind that the zero word is the coset leader if present!

- A standard array is a method to organise codewords and their cosets. This process is called SADA.

- Compiling a standard array:

- Row 0: lists all codewords, starting with and then the others arbitrarily.

- Row 1: out of vectors not yet listed, choose of smallest weight (guaranteeing it’s a coset leader - minimal weight in the row). Fill in the rest of the row by adding to each codeword in row 0.

- Row 2: repeat process for row 1, still ensuring no repeated vectors and still adding to row 0.

- …

- Row : this is the final row, repeat the same process and then conclude.

- Note: often the coset leaders are something like for a binary code or for a ternary code, since they have weight 1. It’s not often the weight goes beyond 2.

- This simplifies finding the successful decoding probability to , where is the number of coset leaders of Hamming weight in a standard array (i.e. the number of non-zero symbols in each coset leader).

- Decoding using SADA: Simply find the codeword, then the decoded message is at the top of the same column, e.g. in the above example.

- Compiling a standard array:

- Cosets are formed by adding a fixed codeword to all existing codewords.

- Dual Codes:

- The dual code consists of all codewords orthogonal to the original code, such that they’re linear codes generated from the parity-check code matrix of its original code.

- If is an linear code, then has parameters , where:

- , since represents the remaining degrees of freedom in -dimensional space, by definition.

- , since it must detect at least one error and cannot have more parity symbols than the original code.

- The rate of is defined as , hence .

- Parity-Check Codes:

- Used to detect single-bit errors and sometimes correct them.

- Found using the relationship: .

- If the generator matrix is in the standard form: , then the parity-check matrix can be quickly found as: .

- Note: is the transpose, where rows become columns, and vice versa.

- In binary codes, the negative sign can be omitted, because of the modular arithmetic.

- Linear Distance Theorem: Can be used to find minimal distance by counting the minimum number of linearly dependent columns of the matrix (minimum number of columns that add to give the zero vector).

- Syndrome Decoding:

- Uses syndromes , where is a received vector. If , the vector is a valid codeword. Note that the syndrome is normally shorter than the vector.

- To decode, compile a table of syndromes…

- Row 0: the zero codeword and its syndrome .

- Row i: a vector of smallest weight such that hasn’t appeared in the table yet and its syndrome .

- Possible shortcut tricks are…

- … the syndrome of form , where is in the -th position, is the -th column of the parity-check matrix.

- … for two vectors , , , e.g. to find you can add .

- Possible shortcut tricks are…

- Should be complete at row .

- With this table, you can receive a vector and then calculate its syndrome .

- In the table, find such that , i.e. find the vector with the same syndrome; this is the coset leader of .

- The decoded codeword is simply the difference between the two vectors, .

- This is better than the standard array, since it uses more computation instead of memory.

NOTE: Remember that two multiply two matrices, the columns of the first matrix must equal the rows of the second! E.g. .

6. Designing Codes

- Cyclic Codes: A linear code where any cyclic shift of a codeword gives another codeword in code.

- Generator Polynomial: a polynomial to generate all codewords of a code - it must divide under . To find this…

- Decompose into irreducible factors over .

- Choose a combination of these factors to form such that the degree of is .

- Generator Matrix:

- Row 1: Coefficients of the generator polynomial, starting with coefficient (constant term) and then go up to .

- Following rows: The previous row shifted along by one, until the number of rows is equal to the degree of the generator polynomial.

- Properties:

- Can be generated by one non-zero codeword (this is the codeword composed of the coefficients of the generator matrix).

- , , .

- BCH Codes (useful to design a cyclic code with a set , with errors to correct, practically where , but theoretically ):

- A basic BCH code is cyclic with .

- It has parameters and .

- To construct, find :

- is an arbitrary prime number, but greater than .

- is found arbitrarily within and is the lower bound for the desired minimal distance.

- is found as the element in which can generate the entire alphabet with its powers, i.e. . This means it’s a primitive element: these are often small numbers, like 2 or 3 - just test manually!

- To test if an element is primitive, ensure that is not satisfied for any divisor of (other than and ). If you add one to each index of its prime factors, multiply them together, subtract two, then you’ll get the number of these.

- Generator Polynomial: a polynomial to generate all codewords of a code - it must divide under . To find this…

- Repetition Codes: Length repeats each bit times, e.g. length would convert .

- Generator Matrix: , where there are the same number of entries as . Always dimension and minimum distance .

- Solution Set: .

Simple Sudoku